In the previous post, we introduced the various functions and commands in MongoDB to retrieve your metrics. We also showcased a few out-of-the-box solutions (statsd, OpenTSDB, Prometheus, Cacti) that ship MongoDB metrics directly to some of the popular monitoring and trending tools. Today we will dive a bit deeper into the metrics: group them together and see which ones are the most important ones to keep an eye on.

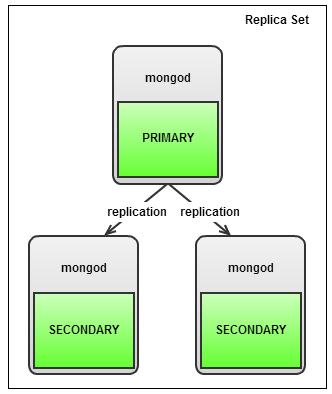

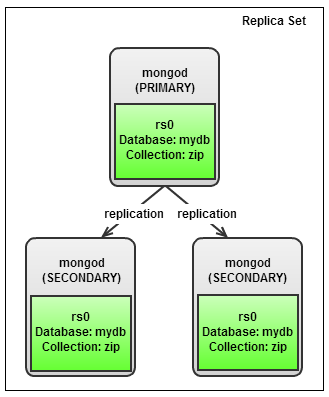

Replication

Oplog

With MongoDB replication, the most important aspect is the oplog. As we described in an earlier blog post about MongoDB configuration, the oplog is comparable to the MySQL binary log. It keeps a history of all transactions in this log file, but in contrary to the MySQL binary log, MongoDB only has one single collection where it stores them. This collection is limited in size. This means that once it is full, old transactions will get purged once a new transaction come in. It will evict the oldest transaction first, so the method used here is FIFO (First In, First Out). Therefore the most important metric to watch is the replication window: the duration of transactions kept in the oplog.

Why is this important? Suppose one of the secondary nodes loses network connectivity with the primary, it will no longer replicate data from the primary. Once it comes back online this secondary node has to catch up with already replicated transactions. It will use the oplog for this purpose. If the secondary node went offline for too long, it can’t use the oplog anymore and a full sync is necessary. A full sync, just like the SST in Galera, is an expensive operation and you would want to avoid this.

mongo_replica_0:PRIMARY> db.getReplicationInfo()

{

"logSizeMB" : 1895.7751951217651,

"usedMB" : 0.01,

"timeDiff" : 11,

"timeDiffHours" : 0,

"tFirst" : "Fri Jul 08 2016 10:56:01 GMT+0000 (UTC)",

"tLast" : "Fri Jul 08 2016 10:56:12 GMT+0000 (UTC)",

"now" : "Fri Jul 08 2016 12:38:36 GMT+0000 (UTC)"

}

As you can see the time difference is already present in the output from the getReplicationInfo function. You can choose to use either the timeDiff in seconds or timeDiffHours in hours here. A side note: this function is only available from the mongo command line tool, so if you want to get this via connecting directly to MongoDB, you will have to get the first and last record from the oplog and calculate the time difference.

If you see the oplog window is becoming (too) short, you can act on this and increase the oplog size for the cluster. Increasing the size of the oplog is a lengthy and painful process where you have to apply the change on every node, one node at a time. See also the MongoDB documentation on this subject.

Replication Lag

Replication lag is very important to keep an eye on: performing read operations on the primary MongoDB node will proxy your request to secondary nodes. MongoDB will only use these secondaries if they don’t lag too far behind. You could bypass this by connecting directly to a secondary node. However, you then need to keep an eye on the lag yourself. If the secondary has replication lag, you risk serving out stale data that already has been overwritten on the primary.

To check the replication lag, it suffices to connect to the primary and retrieve this data from the replSetGetStatus command. In contrary to MySQL, the primary keeps track of the replication status of its secondaries.

A condensed version is seen below:

my_mongodb_0:PRIMARY> db.runCommand( { replSetGetStatus: 1 } )

{

…

"members" : [

{

"_id" : 0,

"name" : "10.10.32.11:27017",

"stateStr" : "PRIMARY",

"optime" : {

"ts" : Timestamp(1466247801, 5),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2016-06-18T11:03:21Z"),

},

{

"_id" : 1,

"name" : "10.10.32.12:27017",

"stateStr" : "SECONDARY",

"optime" : {

"ts" : Timestamp(1466247801, 5),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2016-06-18T11:03:21Z"),

},

{

"_id" : 2,

"name" : "10.10.32.13:27017",

"stateStr" : "SECONDARY",

"optime" : {

"ts" : Timestamp(1466247801, 5),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2016-06-18T11:03:21Z"),

}

],

"ok" : 1

}

You can calculate the lag by simply subtracting the secondary optimeDate (or optime timestamp) from the primary optimeDate. This will give you the replication lag in seconds.

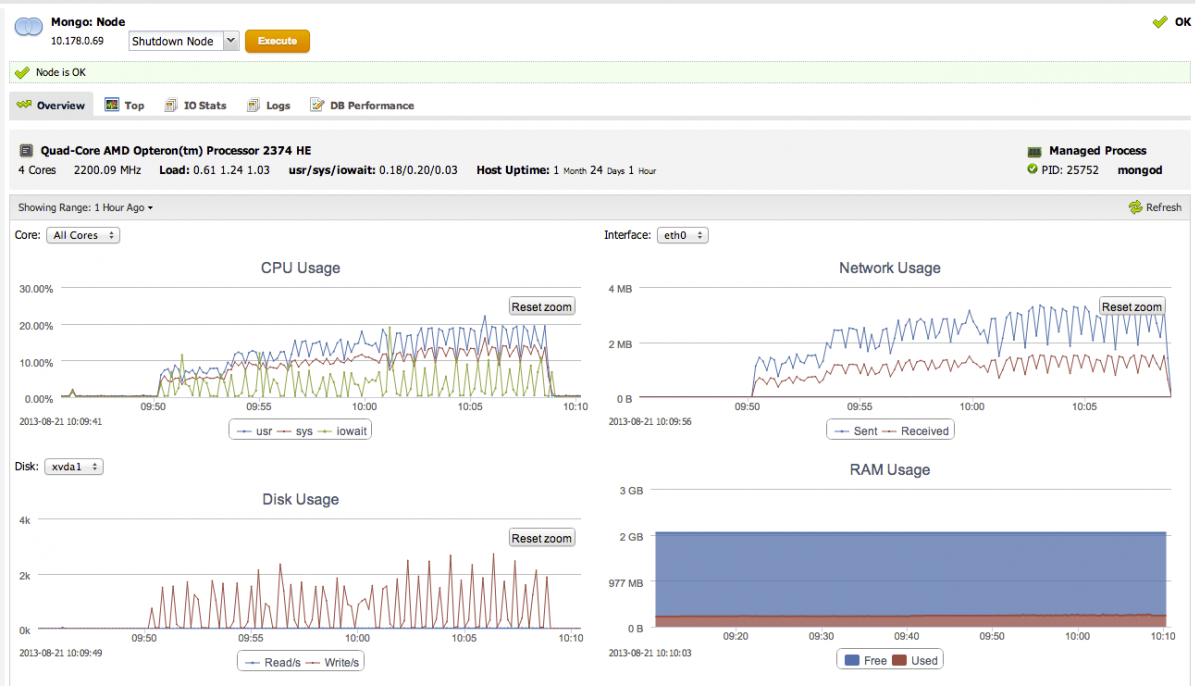

Reasons for replication lag in MongoDB can be either the hardware not able to cope with applying the transactions (CPU/Memory/IO), internal lock contention (storage engine specific) or a deliberately delayed secondary.

Operational metrics

Connection limits

Just like in MySQL, in MongoDB there are also connection and file handler limits. On the server side, MongoDB uses a similar principle to MySQL to handle connections and also supports persistent connections. It also has similar issues like MySQL, once you exhaust the maximum number of connections. The same ulimit problem exists once you exceed your OS limits, and also the same solution applies by raising the hard limits.

On the client side, it is a different story though: most drivers will enable connection pooling by default. This means that not only they will reuse the connections when necessary, but also can use them in parallel if necessary. Still if something is going wrong with your application, this means the client will spawn extra connections to satisfy the need of spare connections in the pool.

In other words: you definitely want to keep an eye on the connections going to your database.

This metric can be found in the serverStatus function:

mongo_replica_0:PRIMARY> db.serverStatus().connections

{ "current" : 25, "available" : 794, "totalCreated" : NumberLong(122418) }

This gives you an accurate number of connections at this very moment and how many are still available. If the number of connections used are going up rapidly, then you know it is time to investigate.

Transactions

In contrary to MySQL, a transaction is a broad term in MongoDB. MongoDB supports atomicity at the document level and this includes the embedded documents inside this document. Writing to a sequence of documents at once will not be atomic as other operations may be written interleaved.

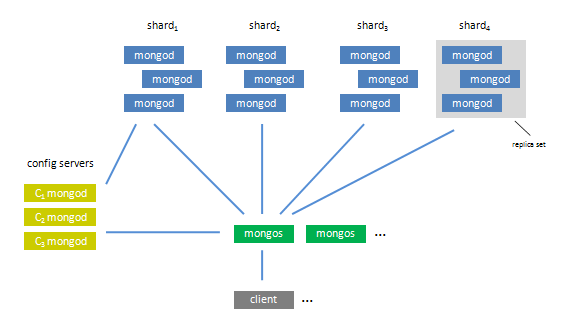

However for performing atomicity on multiple documents, you can provide the $isolated operator. This will cause the other clients requesting data not to see the changes you have made until the write operator has finished. This is not the same as a transaction though: once your write operation has reached an error there will be no rollback. Also the $isolated operator will not work on sharded clusters as it would then have to work across shards.

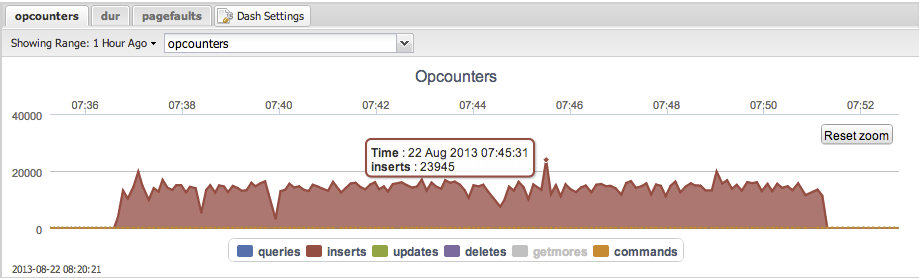

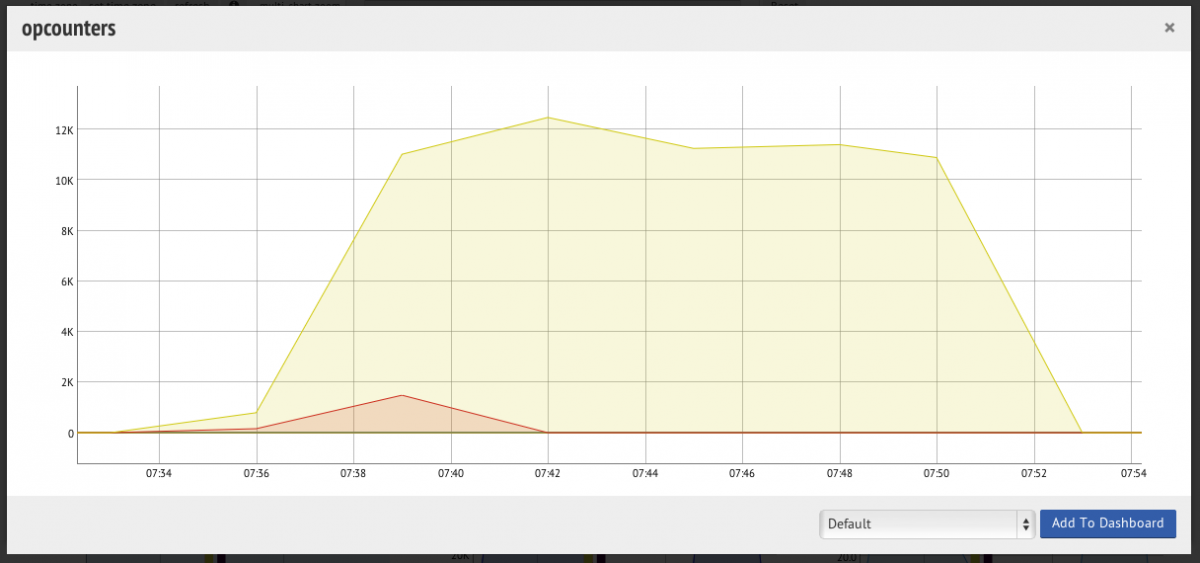

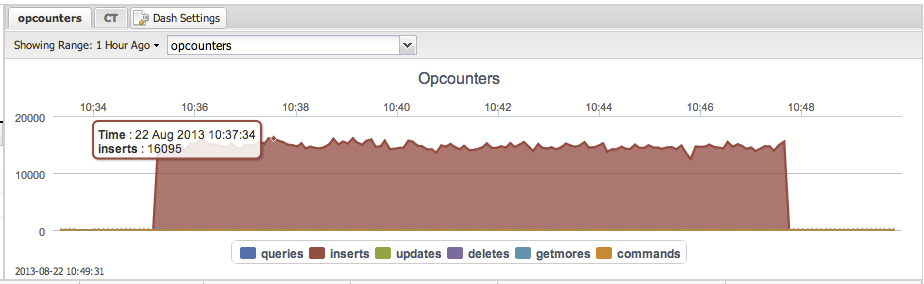

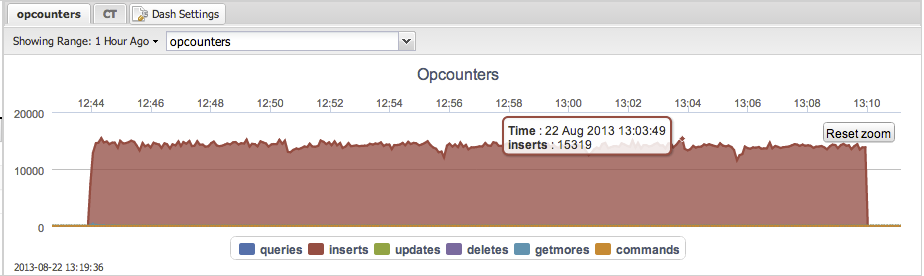

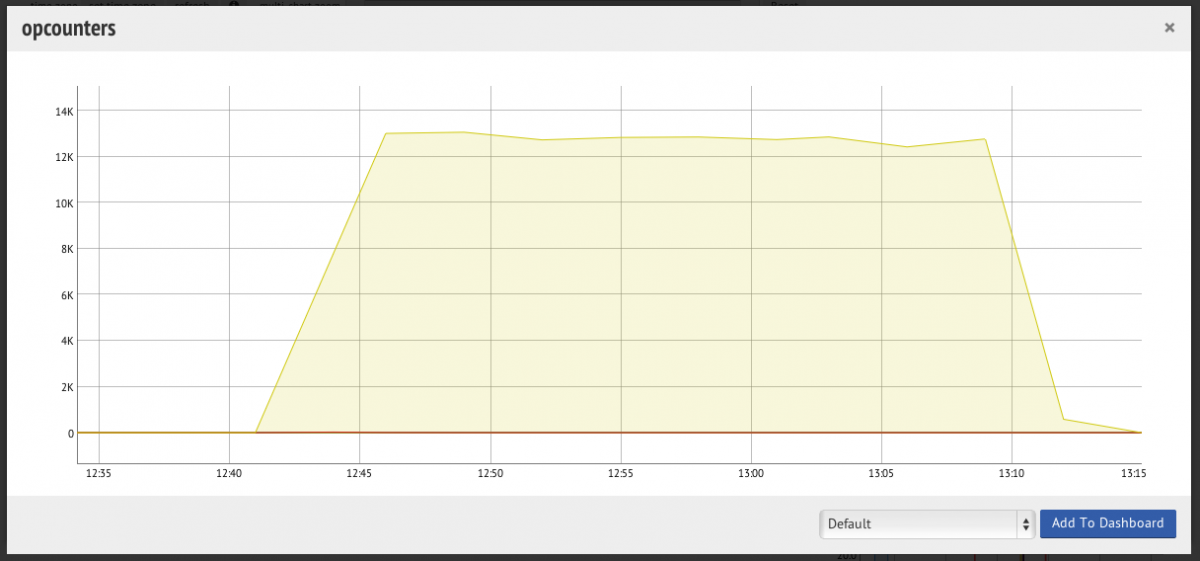

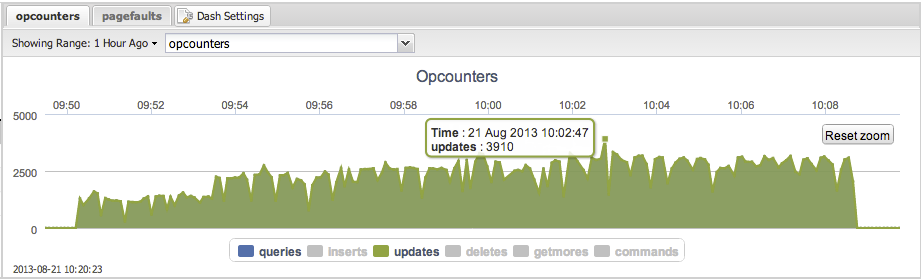

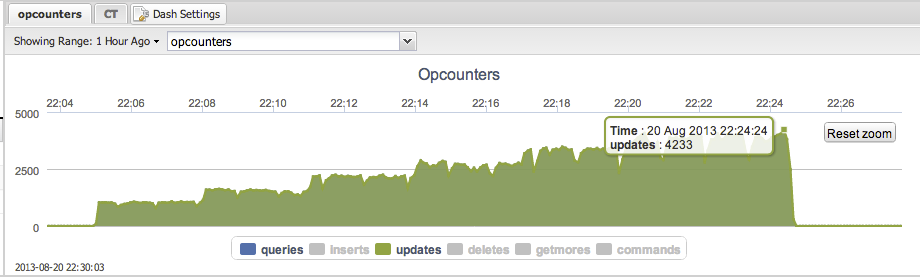

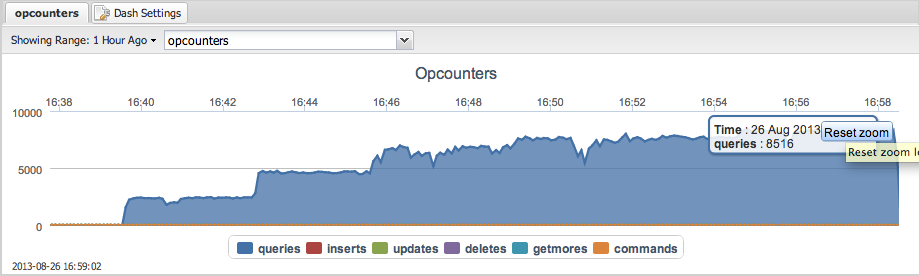

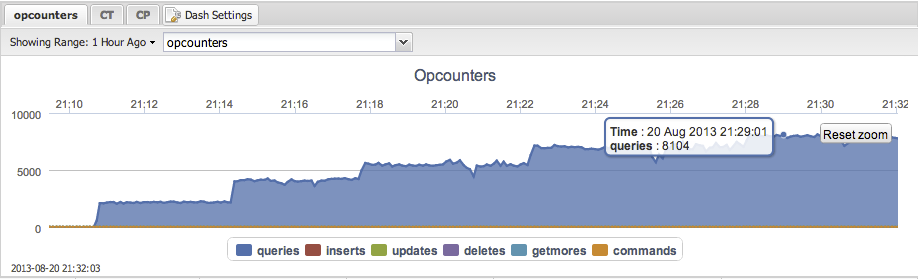

However there simply isn’t just a single metric that gives you the number of transactions in the cluster. You will have to add the opcounters together to get this number. The opcounters.command reflects the total number of read operations, so add this together with opcounters.insert,opcounters.update and opcounters.delete.

Journaling

You would easily confuse the total number of transactions with the dur.commits metric. Even though this metric’s name contains the word commit, this actually has nothing to do with the transactions.

Like with InnoDB in MySQL, MongoDB checkpoints modified data every 60 seconds. That leaves a potential gap of a maximum of 60 seconds to lose data when MongoDB receives an unclean shutdown. To resolve this, journaling has been enabled by default and this allows MongoDB to recover the transactions that are missing since the last checkpoint. The transactions will be written to the journal every interval configured in storage.journal.commitIntervalMs (100ms for WiredTiger).

For MMAP the dur.commits reflects the number of transactions written to the journal since last group commit.

mongo_replica_0:PRIMARY> db.serverStatus().dur.commits

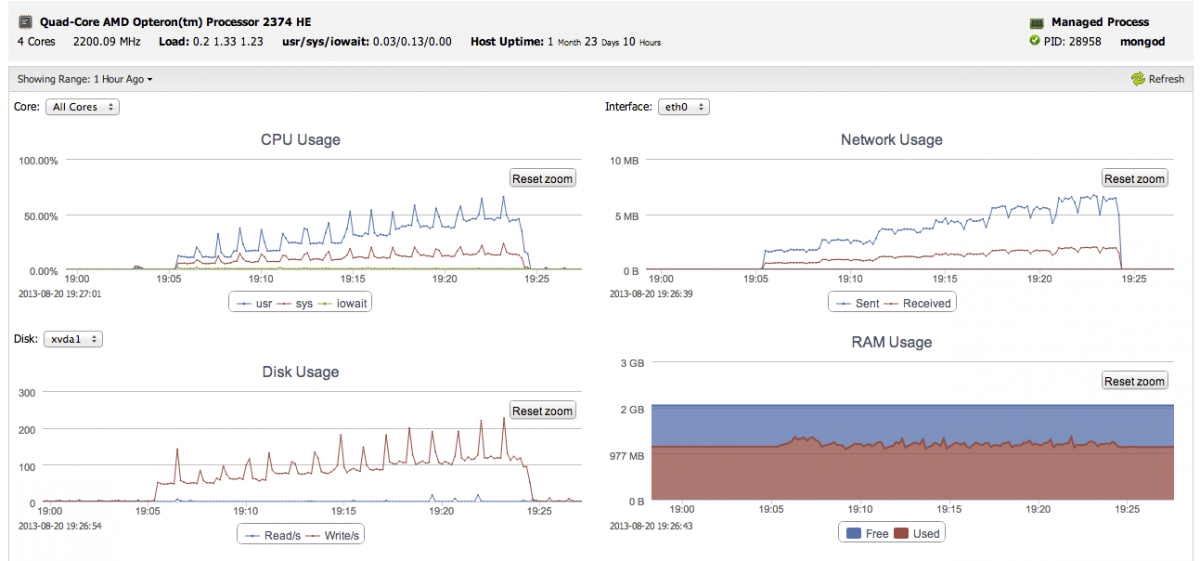

This metric will give you a lot of insight in the workload your server will handle. You can tune the commitIntervalMs parameter to a lower value to increase the frequency of group commits. Keep in mind that this also may increase I/O dramatically.

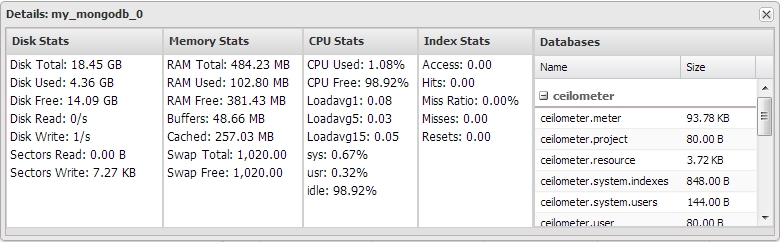

Memory usage

MongoDB uses memory for sorting, fetching, caching and also modifying data. Unlike MySQL, you actually have little influence on how much memory is used for what. The only influence you have is actually the memory used for the various storage engines.

Similar to the InnoDB bufferpool, the vast amount of memory will be consumed by either the MongoDB active memory or the WiredTiger cache. Both will work more efficiently the more memory you (can) give it.

To see how much memory MongoDB actually is using, you can fetch the mem metrics from the serverStatus:

mongo_replica_0:PRIMARY> db.serverStatus().mem

{

"bits" : 64,

"resident" : 401,

"virtual" : 2057,

"supported" : true,

"mapped" : 0,

"mappedWithJournal" : 0

}

To know how efficient your caching is can be interpreted by the number of page faults happening:

mongo_replica_0:PRIMARY> db.serverStatus().extra_info.page_faults

37912924

Every time MongoDB has a cache miss, and it has to retrieve data from disk it will count this as a page fault. A relatively high number of page faults, compared to the number of read operations, indicates the cache is too small. The metrics for cache statistics of WiredTiger are described below.

Detecting locks

MongoDB does support Global, Database and Collection level locking and will also report this in the serverStatus output:

mongo_replica_0:PRIMARY> db.serverStatus().locks

{

"Global" : {

"acquireCount" : {

"r" : NumberLong(2667294),

"w" : NumberLong(20),

"R" : NumberLong(1),

"W" : NumberLong(7)

},

"acquireWaitCount" : {

"r" : NumberLong(1),

"w" : NumberLong(1),

"W" : NumberLong(1)

},

"timeAcquiringMicros" : {

"r" : NumberLong(2101),

"w" : NumberLong(4443),

"W" : NumberLong(52)

}

},

"Database" : {

"acquireCount" : {

"r" : NumberLong(1333616),

"w" : NumberLong(8),

"R" : NumberLong(17),

"W" : NumberLong(12)

}

},

"Collection" : {

"acquireCount" : {

"r" : NumberLong(678231),

"w" : NumberLong(1)

}

},

"Metadata" : {

"acquireCount" : {

"w" : NumberLong(7)

}

},

"oplog" : {

"acquireCount" : {

"r" : NumberLong(678288),

"w" : NumberLong(8)

}

}

}

In principle in MongoDB you should not see much locking happening as these locks are comparable global, schema and table type of locks. The document level locking is missing here as these locks are handled by the storage engine used. In the case of MMAPv1 ( < MongoDB v3.0) the locks will happen on database level.

You should collect each and every one of these metrics as they might help you find performance issues outside the storage engines.

WiredTiger

Locks and concurrency

As described in the previous section, the document level locking is handled by the storage engine. In the case of WiredTiger, it has locks to prevent one thread from writing to the same document as another thread. When a write occurs, a ticket is created to perform the write operation, where the ticket is comparable to a thread.

The number of concurrent transactions are reflected in the wiredTiger.concurrentTransactions metric:

mongo_replica_0:PRIMARY> db.serverStatus().wiredTiger.concurrentTransactions

{

"write" : {

"out" : 0,

"available" : 128,

"totalTickets" : 128

},

"read" : {

"out" : 0,

"available" : 128,

"totalTickets" : 128

}

}

This metric is important because of two reasons: if you see a sudden increase in the write.out tickets, there is probably a lot of document locking going on. Also if the read.available or write.available metrics are nearing zero your threads are getting exhausted. The result will be that new incoming requests are going to be queued.

Transactions

In contrary to the default MongoDB metrics, the WiredTiger output in serverStatus does contain information about transactions.

mongo_replica_0:PRIMARY> db.serverStatus().wiredTiger.transaction

{

"number of named snapshots created" : 0,

"number of named snapshots dropped" : 0,

"transaction begins" : 21,

"transaction checkpoint currently running" : 0,

"transaction checkpoint generation" : 4610,

"transaction checkpoint max time (msecs)" : 12,

"transaction checkpoint min time (msecs)" : 0,

"transaction checkpoint most recent time (msecs)" : 0,

"transaction checkpoint total time (msecs)" : 6478,

"transaction checkpoints" : 4610,

"transaction failures due to cache overflow" : 0,

"transaction range of IDs currently pinned" : 1,

"transaction range of IDs currently pinned by a checkpoint" : 0,

"transaction range of IDs currently pinned by named snapshots" : 0,

"transaction sync calls" : 0,

"transactions committed" : 14,

"transactions rolled back" : 7

}

Metrics to keep an eye on are the trends in begins,committed and rolled back.

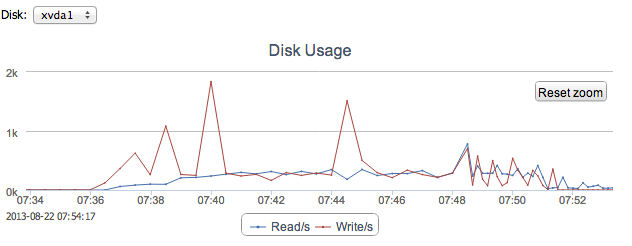

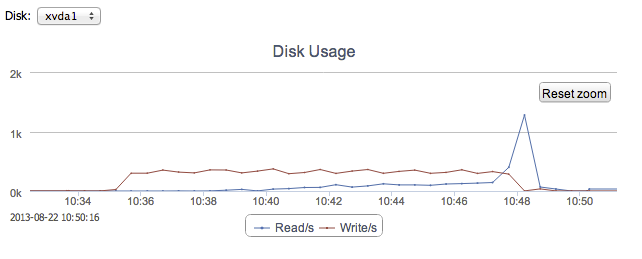

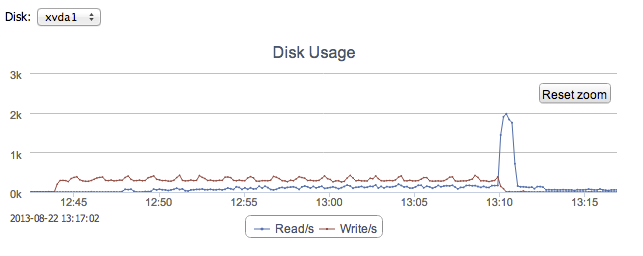

At the same time you can extract the checkpoint max time,checkpoint min time and checkpoint most recent time here. If the checkpointing time taken starts to increase WiredTiger isn’t able to checkpoint the data as quickly as before. It would be best to correlate this with disk statistics.

Cache

For WiredTiger the cache metrics are well accounted for, and these are the most important ones:

mongo_replica_0:PRIMARY> db.serverStatus().wiredTiger.cache

{

"bytes currently in the cache" : 887889617,

"modified pages evicted" : 561514,

"tracked dirty pages in the cache" : 626,

"unmodified pages evicted" : 15823118

}

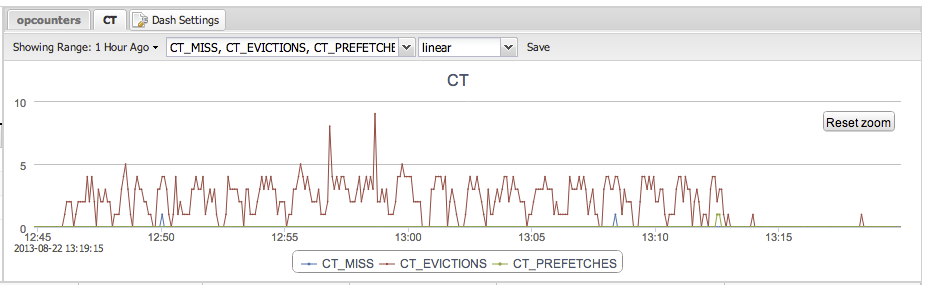

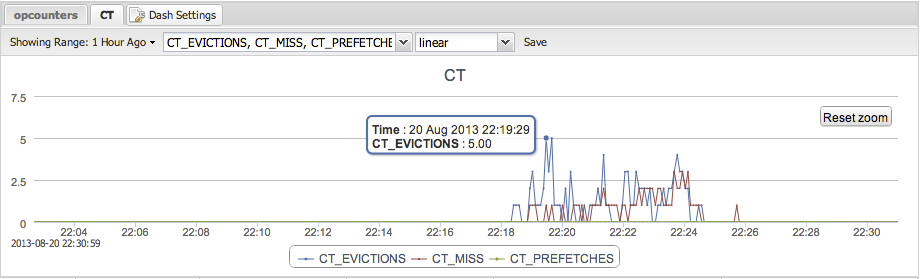

The evictions, both modified and unmodified, should be monitored. Evictions will happen once new data has to be retrieved from disk, and the least recently used (LRU) item will be removed from the cache. Increases in eviction rates means the data in the cache is never read back and thus evicted from the cache. If the evictions mostly happen on unmodified pages it means you can better cache them outside MongoDB. f the evictions happen mostly on the modified pages, the write workload is simply too big.

Conclusion

With this blog post you should be able to monitor and trend the most important metrics of MongoDB and WiredTiger. In a future blog post we will uncover more of the WiredTiger, MMAP and MongoRocks specifics. Our next post will be about Backup and Recovery.